4 Data Trends That Will Digitally Transform the 2020s

Dawn Pennington

Reality Check

August 11, 2020

Dear Member,

The coronavirus has brought massive changes to the way consumers and companies interact. We find ourselves in a new, more digitized economy.

This revolution was already on its way. It just arrived earlier than many expected because of the pandemic.

And now, there's no turning back.

That's good news for all kinds of transformational technologies that have been waiting in the wings for their time to shine. Things like high-speed networks, connected devices, and artificially intelligent everything aren't just possible. Now, they're imminent.

The great news is that many of these trends are as unstoppable as they are investable.

Where do you start? With the one thing that makes them all possible…

Data: The Backbone of Digital Transformation

In 1980, IBM produced the first gigabyte-capacity disk drive. It weighed over 500 pounds and cost $40,000.

Fast-forward to the release of Windows 95. Computers still set you back $700 to $3,000, but at the higher end of that range you could get a 1-gigabyte hard drive that fit inside a personal computer.

At the time, it was hard to fathom filling up all that space. We weren’t backing up thousands of emails, photos, and favorite songs.

Today, you can fit a 128-gigabyte flash drive easily in your pocket, and it will only set you back $18.

How Do You Measure Change? In Zeroes (21 of Them!)

These days, a gigabyte is nothing. We've moved on to the terabyte, which is 1,000 gigabytes. That's about as far as we go when talking about personal computers.

But collecting data (including our personal data) is now the equivalent of mining gold in the 1800s, so a terabyte won't suffice. We've moved on to the petabyte, which is 1,000 terabytes. Then the exabyte, which is 1,000 petabytes. And finally the zettabyte, which is 1,000 exabytes.
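Each step up this ladder is a factor of 1,000 in the decimal (SI) units used here. The binary units (kibibyte, gibibyte, and so on) step by 1,024 instead. Here is a minimal Python sketch of the decimal ladder, assuming whole-number amounts. It can be used to check conversions such as 175 zettabytes into gigabytes. The function names are illustrative only.

```python
# Decimal (SI) storage units: each step up is a factor of 1,000.
UNITS = ["byte", "kilobyte", "megabyte", "gigabyte", "terabyte",
         "petabyte", "exabyte", "zettabyte", "yottabyte"]

def to_bytes(amount: int, unit: str) -> int:
    """Convert an amount in the given unit to bytes (1 unit = 1000**position bytes)."""
    return amount * 1000 ** UNITS.index(unit)

def convert(amount: int, from_unit: str, to_unit: str) -> int:
    """Convert between units with integer math.
    Exact when converting down the ladder; converting up truncates any remainder."""
    return to_bytes(amount, from_unit) // 1000 ** UNITS.index(to_unit)

if __name__ == "__main__":
    print(convert(1, "zettabyte", "exabyte"))     # 1000
    print(convert(175, "zettabyte", "gigabyte"))  # 175000000000000, i.e. 175 trillion
    print(len(str(to_bytes(175, "zettabyte"))) - 3)  # 21 zeros after "175"
```

Integer arithmetic keeps these huge numbers exact, which floating point would not guarantee at this scale.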

Of all the numbers we're talking about today, this is the ONE we want you to remember…

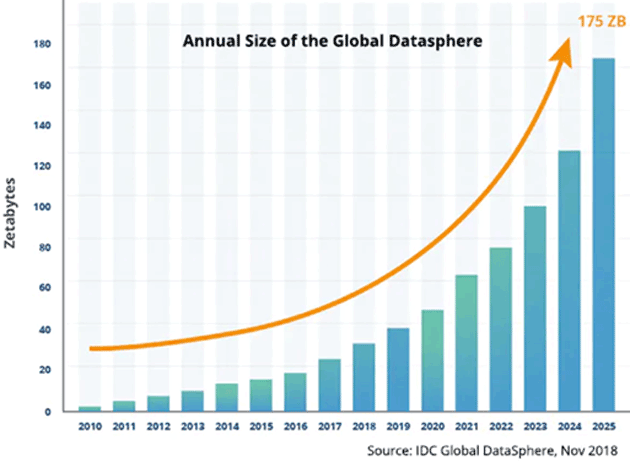

The global datasphere is expected to reach 175 zettabytes by 2025.

Source: Seagate

In bytes, that's the number 175 followed by 21 zeros… the equivalent of 175 trillion gigabytes.

Cisco Systems (CSCO) calls this the "Zettabyte Era." Here's how one of its analysts, Taru Khurana, described what that could look like physically:

"If each Gigabyte in a Zettabyte were a brick, 258 Great Walls of China (made of 3,873,000,000 bricks) could be built."
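The quote's arithmetic holds up. A zettabyte contains 10^12 (one trillion) gigabytes, and dividing that by the quoted 3,873,000,000 bricks per wall gives roughly 258 walls. Here is a quick Python check that uses only the figures in the quote:

```python
# Zettabyte -> gigabyte is four steps of 1,000 (10**21 bytes / 10**9 bytes).
GIGABYTES_PER_ZETTABYTE = 1000 ** 4
# Bricks per Great Wall, as given in the Cisco quote.
BRICKS_PER_GREAT_WALL = 3_873_000_000

walls = GIGABYTES_PER_ZETTABYTE / BRICKS_PER_GREAT_WALL
print(f"{walls:.1f} Great Walls")  # 258.2 Great Walls
```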

That's huge… and getting bigger. Data scientists already have a name for 1,000 zettabytes: a yottabyte.

In other words, big data doesn't sleep. It snowballs.

For investors, that means…

The Bigger the Data, The Bigger the Opportunities

It really can be compared to the Forty-Niners invading California during the Gold Rush.

This time, it's companies rushing to collect—and analyze—as much data as they can. That's because data equals money.

The term "big data" refers to data whose size or type is beyond the ability of traditional databases to capture, manage, and process. It's characterized by the three Vs:

- High volume,

- High velocity, and

- High variety.

These are massive collections of sensor readings, network statistics, log files, social media posts… anything and everything being funneled into storage rapidly and in real time.

The idea that massive amounts of data are valuable is nothing new. Even in the 1950s, companies compiled large amounts of data into spreadsheets for analysis. Then in the 1970s, the biggest companies with the most money could afford room-sized computers to cut down on analysts' time.

Until recently, big data was available only to the largest companies with the biggest technology budgets.

But that is all changing with the adoption of artificial intelligence.

Data is only useful once it is analyzed. And it can only be analyzed after being turned into structured data.
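To make "structured" concrete: a raw log line is just text, while a structured record has named, typed fields that a program can filter and add up. Here is a minimal Python sketch that assumes a hypothetical log format of timestamp, user, action, and response time in milliseconds. The pattern and field names are illustrative, not taken from any real system.

```python
import re
from dataclasses import dataclass

# Hypothetical log format, e.g. "2020-08-11T09:15:02 user=alice action=search ms=42"
LOG_PATTERN = re.compile(
    r"(?P<timestamp>\S+) user=(?P<user>\w+) action=(?P<action>\w+) ms=(?P<ms>\d+)"
)

@dataclass
class LogRecord:
    timestamp: str
    user: str
    action: str
    ms: int

def parse_logs(lines):
    """Turn raw text lines into structured records, skipping lines that don't match."""
    records = []
    for line in lines:
        match = LOG_PATTERN.fullmatch(line.strip())
        if match:
            fields = match.groupdict()
            records.append(LogRecord(fields["timestamp"], fields["user"],
                                     fields["action"], int(fields["ms"])))
    return records

raw = [
    "2020-08-11T09:15:02 user=alice action=search ms=42",
    "garbled line ###",
    "2020-08-11T09:15:07 user=bob action=checkout ms=310",
]
records = parse_logs(raw)
print(sum(r.ms for r in records) / len(records))  # average response time: 176.0
```

Only after parsing can a question like "what is the average response time?" be answered with a single line of code.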

Data scientists spend 50% to 80% of their time collecting and preparing data for use. By using AI and machine learning to streamline that process, they can spend more of their time drawing conclusions.

What we’re seeing now is a complete change in how all this data is handled.

It’s partly because of an exponential increase in the size of the global datasphere, and partly because the technology is finally catching up.

4 Digital Trends That Are Already Transforming Our World

The adoption of 5G plays a big part, reducing latency and increasing the speed at which all this data can be moved and processed.

This is driving explosive demand in certain areas of the technology sector.

Here are four of those trends that we'll be following closely here in Reality Check:

Machine Learning is a subset of AI in which machines learn from data instead of following explicitly programmed rules.

In other words, it trains AI to learn and adapt. This is useful for sorting and analyzing data, and for acting on the conclusions.

By removing the delay of human-driven decisions, it can put efficiency at the forefront.
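What "learning" means here: the program derives its sorting rule from labeled examples instead of having a programmer write the rule by hand. The source doesn't name a specific method. This minimal Python sketch uses a nearest-centroid classifier, one of the simplest machine-learning techniques, chosen for illustration. The training data and labels are hypothetical.

```python
from statistics import mean

def train(examples):
    """Learn one centroid (the average feature vector) per label."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*points))
        for label, points in grouped.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))

# Hypothetical training data: (response time in ms, requests per second) -> label
examples = [
    ((20.0, 1.0), "normal"),
    ((25.0, 2.0), "normal"),
    ((22.0, 1.5), "normal"),
    ((400.0, 9.0), "suspicious"),
    ((450.0, 8.0), "suspicious"),
]
model = train(examples)
print(predict(model, (30.0, 2.0)))   # normal
print(predict(model, (380.0, 7.0)))  # suspicious
```

Feeding the model more labeled examples shifts the centroids. That adjustment is the "adapt" part, with no rule rewritten by hand.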

Data Management refers to the process of organizing and sorting data.

This process has to be repeatable and adaptable. Machine learning will no doubt be part of it, but its foundation will be software and open-source code.
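One way to make a data process "repeatable and adaptable" is to build it as an ordered list of small steps. Rerunning the list reproduces the result, and adapting it means adding, removing, or reordering steps. Here is a minimal Python sketch (Python 3.9+ for the `list[...]` hints). The customer records and step names are hypothetical.

```python
from typing import Callable

Record = dict
Step = Callable[[list[Record]], list[Record]]

def normalize_emails(records: list[Record]) -> list[Record]:
    """Trim and lowercase email addresses so the same address always looks the same."""
    return [{**r, "email": r["email"].strip().lower()} for r in records]

def sort_by(field: str) -> Step:
    """Build a step that sorts records by the given field."""
    def step(records: list[Record]) -> list[Record]:
        return sorted(records, key=lambda r: r[field])
    return step

def run_pipeline(records: list[Record], steps: list[Step]) -> list[Record]:
    """Apply each step in order; the same inputs and steps always give the same output."""
    for step in steps:
        records = step(records)
    return records

customers = [
    {"name": "Bo", "email": "  BO@Example.com "},
    {"name": "Al", "email": "al@example.com"},
]
pipeline = [normalize_emails, sort_by("name")]
print(run_pipeline(customers, pipeline))
```

Each step returns new records instead of editing the originals, so the raw input stays available for a rerun.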

Data Mining is the process by which all this massive data is collected and sifted.

It has to identify and ignore chaotic and repetitive data, so that only the highest-quality data moves on to the data management phase. This usually involves a separate process for each type of data collected.
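The filtering described above might look like this in miniature. Each data type gets its own validity check, and exact repeats are dropped before anything moves on. Here is a minimal Python sketch. The data types, fields, and validity rules are hypothetical.

```python
# Hypothetical validity rules, one per type of data collected.
VALIDATORS = {
    "sensor": lambda r: isinstance(r.get("value"), (int, float)) and -50 <= r["value"] <= 150,
    "social": lambda r: bool(r.get("text", "").strip()),
}

def filter_for_quality(raw_records):
    """Keep only records that pass their type's check and haven't been seen before."""
    seen = set()
    kept = []
    for record in raw_records:
        check = VALIDATORS.get(record.get("type"))
        if check is None or not check(record):
            continue  # unknown type or chaotic/invalid data: ignore it
        key = tuple(sorted(record.items()))  # hashable fingerprint of the record
        if key in seen:
            continue  # exact repeat: ignore it
        seen.add(key)
        kept.append(record)
    return kept

raw = [
    {"type": "sensor", "value": 21.5},
    {"type": "sensor", "value": 21.5},  # duplicate
    {"type": "sensor", "value": 9999},  # out of plausible range
    {"type": "social", "text": "  "},   # empty post
    {"type": "social", "text": "Loving 5G!"},
]
print(filter_for_quality(raw))
# [{'type': 'sensor', 'value': 21.5}, {'type': 'social', 'text': 'Loving 5G!'}]
```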

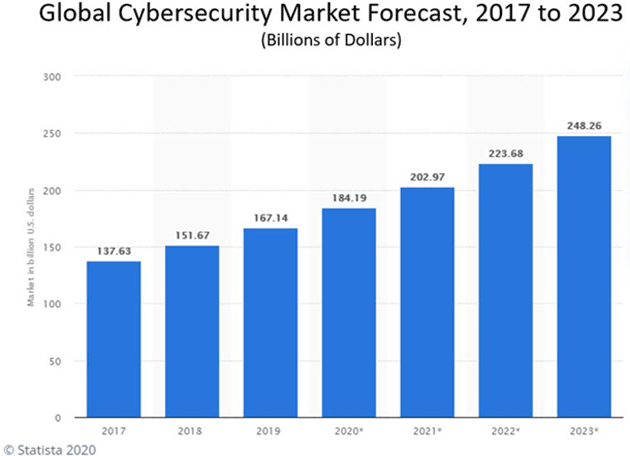

Data & Cybersecurity protect both the data and the network.

This last one alone has been growing rapidly over the past few years…

Source: Statista

Protecting data against cybercrime is set to be a $250 billion industry by 2023.

Here, we're talking about more than your computer zapping some malware that gets downloaded to it. Some of today's cyber-defense programs can notice a SQL query that takes a few milliseconds too long on a server. This can help companies and individuals identify a potential attack as it begins.
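The source doesn't say how these products work internally. A common, simple way to spot a query that runs "a few milliseconds too long" is to compare each query's duration with a statistical baseline and flag outliers. Here is a minimal Python sketch. It assumes hypothetical timings for one SQL query and a threshold of three standard deviations.

```python
from statistics import mean, stdev

def flag_slow_queries(baseline_ms, recent_ms, threshold=3.0):
    """Return (index, duration) for recent queries more than `threshold`
    standard deviations slower than the baseline average."""
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)  # sample standard deviation; needs 2+ baseline values
    if sigma == 0:
        # Perfectly steady baseline: anything slower than usual stands out.
        return [(i, t) for i, t in enumerate(recent_ms) if t > mu]
    return [(i, t) for i, t in enumerate(recent_ms) if (t - mu) / sigma > threshold]

# Hypothetical timings for the same SQL query, in milliseconds
baseline = [12.0, 11.5, 12.3, 11.8, 12.1, 12.4, 11.9, 12.0]
recent = [12.2, 11.9, 15.7, 12.1]
print(flag_slow_queries(baseline, recent))  # [(2, 15.7)]
```

The flagged query is less than 4 ms slower than usual. It stands out only because normal runs vary by a fraction of a millisecond. Commercial tools presumably track many more signals than this single one.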

It's no wonder companies are pouring billions of dollars into all aspects of big data. Management teams everywhere want to:

- Reduce production costs and improve efficiency with faster and better decision-making.

- Develop new products and services around what consumers actually want and need.

- Recommend products in real time based on browsing history or a customer's answers to a few questions.

Together, data collection, analysis, and deployment form an important trend, one that's like a runaway snowball rolling down a steep incline. It's picking up more and more momentum as it rolls.

Tell us: We're talking about big data because it's bigger than any one industry or company. Where do you think it can make the biggest difference? What transformational technologies are you eager—or perhaps anxious—to see? Send us an email here.

There are already several companies that are standing out … and ways for investors to start profiting as well. Stay tuned.

Dawn Pennington